Log-Wrangling 101: 7 Tips for Managing Your SQL Server Transaction Logs

In my last post, I broke down the parts of the anatomy of the SQL Server transaction log. In this post, I will share a few tips for keeping your transaction logs well-maintained and your SQL Server databases happy and healthy.

Here are 7 important tips for managing your transaction logs:

- Select the optimal recovery model and backup strategy for your database.

- Configure Auto-Growth setting for optimal performance.

- Configure the Max File Size of the transaction log.

- Store log files on a separate drive from data files.

- Minimize the use of log shrinking.

- Use only one log file.

- Monitor log file size and growth regularly.

Now, let’s break these down one at time.

Recovery Model and Backups

First, the #1 factor to consider in managing SQL Server transaction logs is the recovery model settings of your databases. Additionally, you must consider the correlated backup processes required for recovery for each model.

In a recent post, we explained that if a database is in Simple Recovery mode, SQL Server will take full or differential backups as scheduled. In this mode, however, the database can only be restored to the point of the last backup. Transaction logs are flushed automatically by SQL Server as data is committed, so there is no log maintenance or transaction log backup required. This is a good strategy for non-critical databases where your recovery point objective can be the point of the last full or differential backup.

By contrast, databases in Full or Bulk-Logged Recovery mode rely on the DBA to maintain the logs. Administrators must ensure that they are truncated frequently enough by a transaction log backup to prevent them from filling up. A common practice used by many DBAs is to use Ola Hallengren’s scripts for establishing the backup chain. Additionally, a common cadence is to perform weekly full backups, daily differential backups, and transaction log backups every 15 minutes.

Auto-Growth Setting

The Auto-Growth setting tells SQL Server how much to grow the log file each time that it needs to expand. The size of this growth should not be too small, since log file growth operations are slow and can impact query performance. This impact is due to the fact that log files have been historically unable to make use of the instant file initialization (IFI) option.

Notably, there have been some improvements in this area if you are on SQL Server 2022. Specifically, fewer VLFs are created internally by SQL Server logging processes. Additionally, the transaction log can make use of IFI if it is enabled under the normal requirements and auto-growth is not set to more than 64 MB (the default setting for 2022). (More below*)

For earlier editions, however, transaction logs cannot use IFI. Therefore, using a larger auto-growth setting of 256 MB to 1024 MB (or more) can be beneficial for performance.

Additionally, setting the initial size of the log file to 20-30% of the initial data file size is a good practice. Pay particular attention to the initial size for the tempdb log file. Tempdb will always be shrunk to its initial size after a restart and will have to re-grow to a standard size. So, you do not want this setting to be unreasonably small to start.

How to Adjust in SSMS

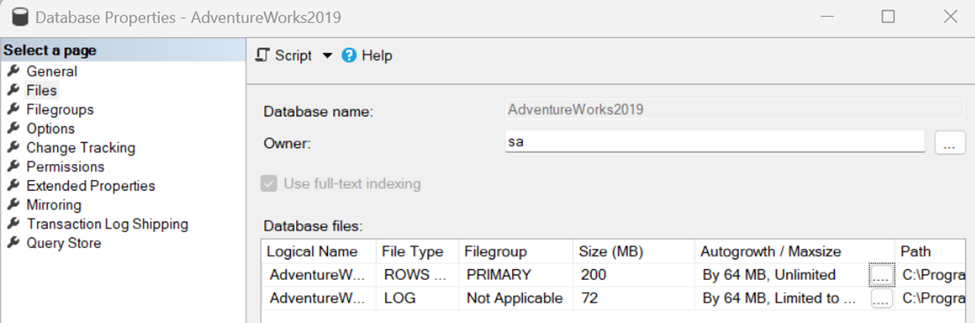

Right click on the database and navigate to the properties tab. Click on Files in the list on the left.

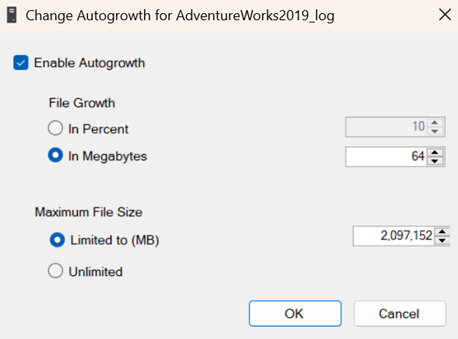

Then click on the ellipsis next to the log file,

and change the File Growth to the appropriate value.

You can also run the below query to update the setting:

ALTER DATABASE [YourDB] MODIFY FILE (name = 'YourDB', FILEGROWTH=256MB);

Max File Size Setting

Configuring the Max File Size setting is not necessary for all databases. However, doing so can be useful for databases where there is a reasonable likelihood that there could be a runaway query or process that could cause the log to grow to the point that it consumes all space on the server and causes SQL Server to fail.

If this setting is in place in such an event, SQL Server will be able to read but unable to commit transactions and will throw errors. It will not, however, fill the entire server and cause SQL Server to fail.

How to Adjust in SSMS

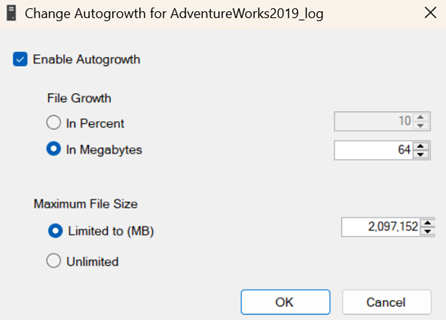

Follow the same directions as above, and in the Autogrowth window, change the Maximum File Size:

Or use this query:

ALTER DATABASE [YourDB] MODIFY FILE (name = 'YourDB_logfilename', SIZE=64000MB);

Log Storage

Best practice is to isolate your log files on a separate physical drive from your data files for optimal performance. This is to prevent the intensive sequential write workload required for the logs from interfering with the random write workload used for the data files.

Log Shrinking

Occasionally, we will see databases with log shrinking employed as a part of routine maintenance or the Auto-Shrink feature turned on. Both should be avoided.

Log shrinking should be reserved for occasions when there is a large amount of unused log space or when bulk operations may have caused the log to grow more than it ordinarily would. (Note that databases that see many bulk operations may benefit from the Bulk-Logged recovery model setting, which suspends logging during qualifying bulk operations.)

Using Auto-Shrink should only be done with caution and full awareness of the implications, since it can lead to significant performance issues. Microsoft’s documentation does a good job of outlining the feature and its implications.

How to Identify and Shrink Appropriate Logs in SSMS

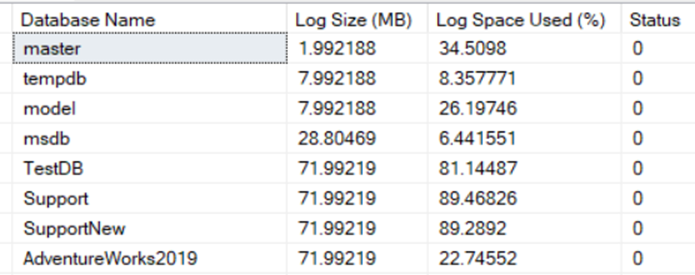

Obtain information about log space consumption using:DBCC SQLPERF(logspace)

If necessary, files with an unusually large percentage of unused log space can be shrunk using:

DBCC shrinkfile(YourDB_log, 1024) -- shrink log to 1 GB

The appropriate size to which to shrink the log will depend on the database. Shrinking to an average log size makes sense. (See below for information about obtaining this.**)

Use One Log File

Only set up multiple log files for a database in unique situations where space is limited (like in the case of a full log drive). If this is required, you should remedy this situation as soon as possible.

Understand that setting up multiple log files does not allow SQL Server to write to them in parallel and does not improve performance. In fact, having multiple large log files will significantly slow down your recovery if you need to restore your database in a DR scenario.

Monitor Regularly

Once you have your databases configured according to best practices and your maintenance processes established, your transaction logs could easily operate without issue for years!

It is still good practice, however, to monitor the log file size, growth, and performance for issues before they have a chance to escalate to crisis events.

There are multiple tools and services on the market that can assist with this, including our own SEROShield, but you can also monitor with reports that provide insight through queries like the following:

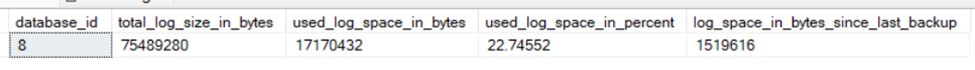

Monitoring Log Usage

DBCC SQLPERF(logspace)

SELECT * FROM sys.dm_db_log_space_usage

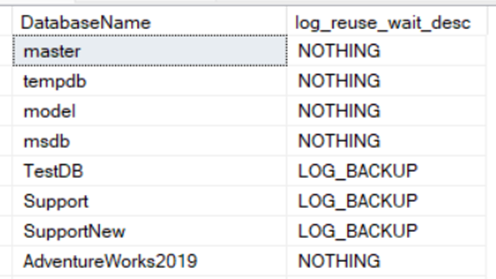

Why a Log is Awaiting Truncation

SELECT [name] as DatabaseName, [log_reuse_wait_desc] FROM sys.databases

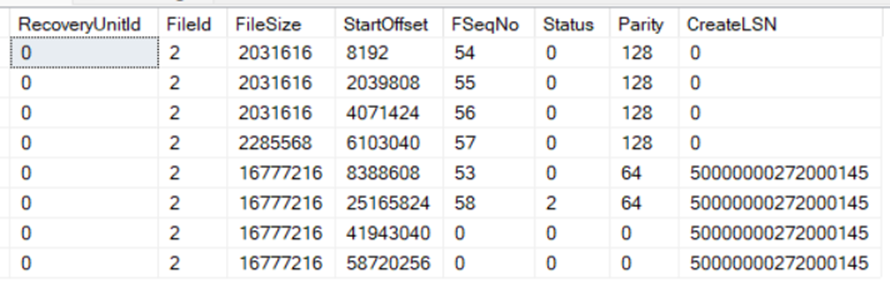

Monitoring Virtual Log Files (VLFs)

DBCC LOGINFO

Want to learn more?

Here are a few more resources about some of the topics we touched on:

- *SQL Server 2022 Changes – a very interesting demo of the new functionality

- Instant File Initialization (IFI) and the Transaction Log – Microsoft documentation

- More Information and Best Practices

- Transaction Log Growth Triage – a nice post on triaging a transaction log growth event

- **Tracking Log Usage – a process for tracking log usage over time

- More on Monitoring

- Virtual Log Files (VLFs)

Your SQL log file configuration can affect performance. It can also affect the recoverability of key databases. If you’re concerned about your log file configuration, we’re happy to help. Contact us to set up a short call to discuss.